Tasks 👩‍💻👨‍💻

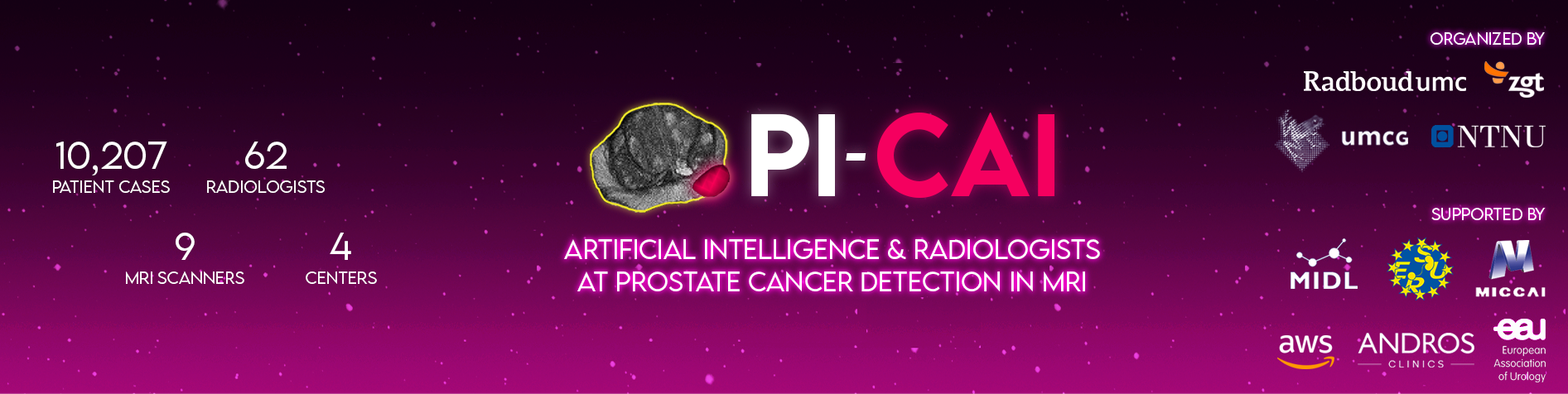

The PI-CAI: AI Study (grand challenge) aims to evaluate the performance of modern AI algorithms at patient-level diagnosis and lesion-level detection of clinically significant prostate cancer (csPCa; ISUP ≥ 2 cancer) in biparametric MRI (bpMRI). Like radiologists, AI algorithms are expected to read bpMRI exams (imaging plus clinical variables) and produce detections of clinically significant lesions, each with a likelihood score of harboring csPCa, along with an overall patient-level score for csPCa diagnosis.

Figure. (top) Lesion-level csPCa detection (modeled by 'AI'): for a given patient case, using the bpMRI exam (and optionally all clinical/acquisition variables), predict a 3D detection map of non-overlapping, non-connected csPCa lesions with the same dimensions and resolution as the T2W image. All voxels of a predicted lesion must share a single floating-point value between 0 and 1, representing that lesion’s likelihood of harboring csPCa. (bottom) Patient-level csPCa diagnosis (modeled by 'f(x)'): for a given patient case, using the predicted csPCa lesion detection map (and optionally all clinical/acquisition variables), compute a single floating-point value between 0 and 1, representing that patient’s overall likelihood of harboring csPCa. For instance, f(x) can simply take the maximum of the csPCa lesion detection map, or it can be a more complex heuristic defined by the AI developer.
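As a rough illustration of the output format described in the caption, the sketch below checks a candidate detection map and computes the simplest f(x), the maximum over the map. It assumes the detection map is a NumPy array, that lesions are separated using SciPy's default face connectivity, and it only compares array shapes (voxel spacing is not available from a bare array). The helpers `check_detection_map` and `patient_score_max` are hypothetical and not part of any PI-CAI package.

```python
import numpy as np
from scipy import ndimage


def check_detection_map(det_map: np.ndarray, t2w_shape) -> int:
    """Validate the detection-map format and return the number of lesions.

    Assumption: lesions are separated using SciPy's default (face) connectivity.
    """
    if det_map.shape != tuple(t2w_shape):
        raise ValueError(f"shape {det_map.shape} does not match T2W shape {tuple(t2w_shape)}")
    if det_map.min() < 0 or det_map.max() > 1:
        raise ValueError("detection map values must lie in [0, 1]")
    # Label each connected non-zero region as one predicted lesion.
    labels, n_lesions = ndimage.label(det_map > 0)
    for k in range(1, n_lesions + 1):
        values = np.unique(det_map[labels == k])
        if values.size != 1:
            # Either one lesion has varying values, or two touching lesions
            # with different likelihoods were merged into one region.
            raise ValueError(f"lesion {k} contains {values.size} distinct values")
    return n_lesions


def patient_score_max(det_map: np.ndarray) -> float:
    """The simplest f(x): the maximum lesion likelihood (0.0 if there are no lesions)."""
    return float(det_map.max())


det = np.zeros((2, 4, 4))
det[0, 0:2, 0:2] = 0.7  # lesion 1
det[1, 3, 3] = 0.3      # lesion 2, not connected to lesion 1
print(check_detection_map(det, (2, 4, 4)), patient_score_max(det))  # 2 0.7
```

Because two touching lesions with different values are labeled as one region, the same check also flags violations of the non-connected requirement.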

We require detection maps as the model output (rather than softmax predictions), so that we can definitively evaluate object/lesion-level detection performance using precision-recall (PR) and free-response receiver operating characteristic (FROC) curves. With voxel-wise softmax volumes, it is ambiguous how this should be handled, e.g. what single csPCa likelihood to assign to each predicted lesion, what defines the spatial boundaries of each predicted lesion, and, in turn, what counts as an object-level hit (TP) or miss (FN) under a given hit criterion.
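To make this ambiguity concrete, consider one possible way of turning a softmax volume into a detection map: threshold it, split the result into connected components, and assign each component its peak softmax value. The threshold of 0.5 and the choice of the peak (rather than, say, the mean) are arbitrary assumptions of this sketch, and `softmax_to_detection_map` is a hypothetical helper, not the lesion-extraction procedure used by the PI-CAI baselines.

```python
import numpy as np
from scipy import ndimage


def softmax_to_detection_map(softmax: np.ndarray, threshold: float = 0.5) -> np.ndarray:
    """Hypothetical conversion: threshold, split into connected components,
    and give every voxel of a component that component's peak softmax value."""
    labels, n_lesions = ndimage.label(softmax >= threshold)
    det_map = np.zeros(softmax.shape, dtype=float)
    for k in range(1, n_lesions + 1):
        component = labels == k
        det_map[component] = softmax[component].max()  # one likelihood per lesion
    return det_map


sm = np.array([0.1, 0.6, 0.9, 0.55, 0.2, 0.7, 0.4, 0.05]).reshape(1, 1, 8)
print(softmax_to_detection_map(sm).ravel().tolist())
# [0.0, 0.9, 0.9, 0.9, 0.0, 0.7, 0.0, 0.0]
```

Changing either choice changes the lesion boundaries and likelihoods, and therefore which predictions count as hits; requiring detection maps leaves these decisions to the AI developer rather than to the evaluation.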

Similar to clinical practice, PI-CAI mandates coupling the tasks of lesion detection and patient diagnosis to promote interpretability and to disincentivize AI solutions that produce inconsistent outputs (e.g. a high patient-level csPCa likelihood score without any significant csPCa detections, or vice versa). Organizers will provide end-to-end baseline solutions, adapted from the standard U-Net (Ronneberger et al., 2015), nnU-Net (Isensee et al., 2021) and nnDetection (Baumgartner et al., 2021) models. The related code is available on GitHub:

- Preprocessing scripts for 3D medical images, geared towards csPCa detection in MRI: github.com/DIAGNijmegen/picai_prep/
- Baseline AI models for 3D csPCa detection/diagnosis in bpMRI: github.com/DIAGNijmegen/picai_baseline

Evaluation 📊

Performance Metrics

Patient-level diagnosis performance is evaluated using the Area Under the Receiver Operating Characteristic curve (AUROC). Lesion-level detection performance is evaluated using Average Precision (AP). The overall score used to rank each AI algorithm is the average of both task-specific metrics:

Overall Ranking Score = (AP + AUROC) / 2
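A minimal sketch of how the two task metrics combine into the ranking score, written in plain Python to make the arithmetic explicit. It assumes patient-level labels and scores as lists, and lesion-level predictions already matched to the ground truth as (score, is_true_positive) pairs. AP here is taken as the mean precision at each true positive, with missed ground-truth lesions still counted in the denominator; this is an assumption of the sketch and may differ in detail (e.g. tie handling or interpolation) from the picai_eval implementation used for the leaderboards.

```python
from typing import List, Sequence, Tuple


def auroc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Patient-level AUROC as the probability that a random positive case
    outranks a random negative case (ties count as half)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def average_precision(lesions: List[Tuple[float, bool]], n_gt_lesions: int) -> float:
    """Lesion-level AP: mean precision at each true positive, ranked by score.
    Ground-truth lesions that were never detected still count in the denominator."""
    ranked = sorted(lesions, key=lambda x: x[0], reverse=True)
    tp, total = 0, 0.0
    for rank, (_, is_tp) in enumerate(ranked, start=1):
        if is_tp:
            tp += 1
            total += tp / rank  # precision at this point in the ranking
    return total / n_gt_lesions


def ranking_score(ap: float, auc: float) -> float:
    """Overall Ranking Score = (AP + AUROC) / 2."""
    return (ap + auc) / 2


patient_auc = auroc([1, 0, 1, 0], [0.9, 0.8, 0.7, 0.1])  # 0.75
lesion_ap = average_precision([(0.9, True), (0.6, False), (0.4, True)], 3)  # 5/9 ≈ 0.556
print(ranking_score(lesion_ap, patient_auc))  # ≈ 0.653
```

In the example, three ground-truth lesions exist but only two are detected, so the missed lesion lowers AP even though it never appears in the ranked list.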

The Free-Response Receiver Operating Characteristic (FROC) curve is used for secondary analysis of AI detections (as recommended in Penzkofer et al., 2022). We highlight performance on the FROC curve using the SensX metric: the sensitivity of a given AI system at detecting clinically significant prostate cancer (i.e., Gleason grade group ≥ 2 lesions) on MRI, at the same number of false positives per examination as the PI-RADS ≥ X operating point of radiologists. Here, "radiologists" refers to the radiology readings that were historically made for these cases during multidisciplinary routine practice. For instance, in the FROC curve in the top-right corner of Fig. 4 in Bosma et al., 2023, the Report-guided SSL model has a Sens5 of around 0.62, a Sens4 of around 0.75 and a Sens3 of around 0.78.

Across the PI-CAI testing leaderboards (Open Development Phase - Testing Leaderboard, Closed Testing Phase - Testing Leaderboard), SensX is computed at thresholds that are specific to the testing cohort (i.e., they depend on the radiology readings and the set of cases). It therefore does not make sense to reuse those exact thresholds for a different dataset, but you can compute the general metric (lesion detection sensitivity at a given false-positives-per-examination rate) with the picai_eval repo, using metrics.lesion_TPR_at_FPR.
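The general metric (lesion sensitivity at a given number of false positives per examination) can be sketched as a threshold sweep over lesion-level predictions. The function name `sensitivity_at_fp_rate` and its inputs are assumptions for illustration; it returns the best sensitivity among thresholds whose false-positive rate does not exceed the target, without interpolation, and it is not the picai_eval implementation. To mimic SensX on your own cohort, you would pass the false positives per examination observed at the radiologists' PI-RADS ≥ X operating point.

```python
from typing import List, Tuple


def sensitivity_at_fp_rate(
    lesions: List[Tuple[float, bool]],
    n_gt_lesions: int,
    n_cases: int,
    fp_per_case: float,
) -> float:
    """Highest lesion-level sensitivity reachable at a threshold whose
    false positives per examination do not exceed `fp_per_case`."""
    ranked = sorted(lesions, key=lambda x: x[0], reverse=True)
    tp = fp = 0
    best = 0.0
    for i, (score, is_tp) in enumerate(ranked):
        if is_tp:
            tp += 1
        else:
            fp += 1
        # Lowering the threshold to `score` admits every prediction with that
        # score, so only evaluate after the last one in a tie group.
        if i + 1 < len(ranked) and ranked[i + 1][0] == score:
            continue
        if fp / n_cases <= fp_per_case:
            best = max(best, tp / n_gt_lesions)
    return best


preds = [(0.9, True), (0.8, False), (0.7, True), (0.5, False), (0.3, True)]
print(sensitivity_at_fp_rate(preds, n_gt_lesions=4, n_cases=2, fp_per_case=0.5))  # 0.5
```

Sweeping `fp_per_case` over a range of values traces out the FROC curve point by point.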

Intersection over Union (IoU) on its own is not used as a metric for evaluating detection or diagnostic performance, as it is ill-suited to validating these tasks (Reinke et al., 2022).

Hit Criterion for Lesion Detection

A “hit criterion” is a condition that each predicted lesion must satisfy to count as a hit, or true positive. For csPCa detection in recent prostate-AI literature, hit criteria have typically required a minimum degree of overlap between the prediction and the ground truth, localization of the prediction within a maximum distance of the ground truth, or localization to a specific region (as defined by sector maps).

For the 3D detections predicted by AI, we opt for a hit criterion based on object overlap:

- True Positives: For a predicted csPCa lesion detection to be counted as a true positive, it must share a minimum overlap of 0.10 IoU in 3D with the ground-truth annotation. This threshold is consistent with other lesion detection studies in recent literature (Bosma et al., 2023, Duran et al., 2022, Baumgartner et al., 2021, Saha et al., 2021, Hosseinzadeh et al., 2021, McKinney et al., 2020, Jaeger et al., 2019).

- False Positives: Predictions with no or insufficient overlap count as false positives, regardless of their size or location.

- Edge Cases: When multiple predicted lesions have sufficient overlap (≥ 0.10 IoU) with a ground-truth lesion, only the prediction with the largest overlap is counted, while all other overlapping predictions are discarded. Predictions that have sufficient overlap but are discarded in this manner do not count as false positives, to account for split-merge scenarios.
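The hit criterion can be sketched as follows, assuming predicted and ground-truth lesions are given as lists of boolean 3D masks (one per connected component), with one likelihood score per predicted lesion. Each ground-truth lesion is evaluated independently. `iou_3d` and `match_lesions` are hypothetical helpers, not the picai_eval API, which may resolve rare cases (such as one prediction overlapping two ground-truth lesions) differently.

```python
from typing import List, Tuple

import numpy as np


def iou_3d(a: np.ndarray, b: np.ndarray) -> float:
    """Intersection over Union of two boolean 3D masks."""
    inter = np.logical_and(a, b).sum()
    union = np.logical_or(a, b).sum()
    return float(inter / union) if union > 0 else 0.0


def match_lesions(
    pred_masks: List[np.ndarray],
    pred_scores: List[float],
    gt_masks: List[np.ndarray],
    min_iou: float = 0.10,
) -> Tuple[List[float], List[float], int]:
    """Return (true-positive scores, false-positive scores, number of missed lesions)."""
    tp_scores: List[float] = []
    n_missed = 0
    overlapping = set()  # predictions with IoU >= min_iou to at least one GT lesion
    for gt in gt_masks:
        ious = [iou_3d(p, gt) for p in pred_masks]
        hits = [i for i, v in enumerate(ious) if v >= min_iou]
        if not hits:
            n_missed += 1  # false negative: no sufficiently overlapping prediction
            continue
        best = max(hits, key=lambda i: ious[i])  # only the largest overlap counts
        tp_scores.append(pred_scores[best])
        overlapping.update(hits)  # other hits are discarded, not counted as FP
    fp_scores = [s for i, s in enumerate(pred_scores) if i not in overlapping]
    return tp_scores, fp_scores, n_missed
```

The returned true-positive and false-positive scores, together with the count of missed lesions, are the lesion-level inputs needed for PR and FROC analysis.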